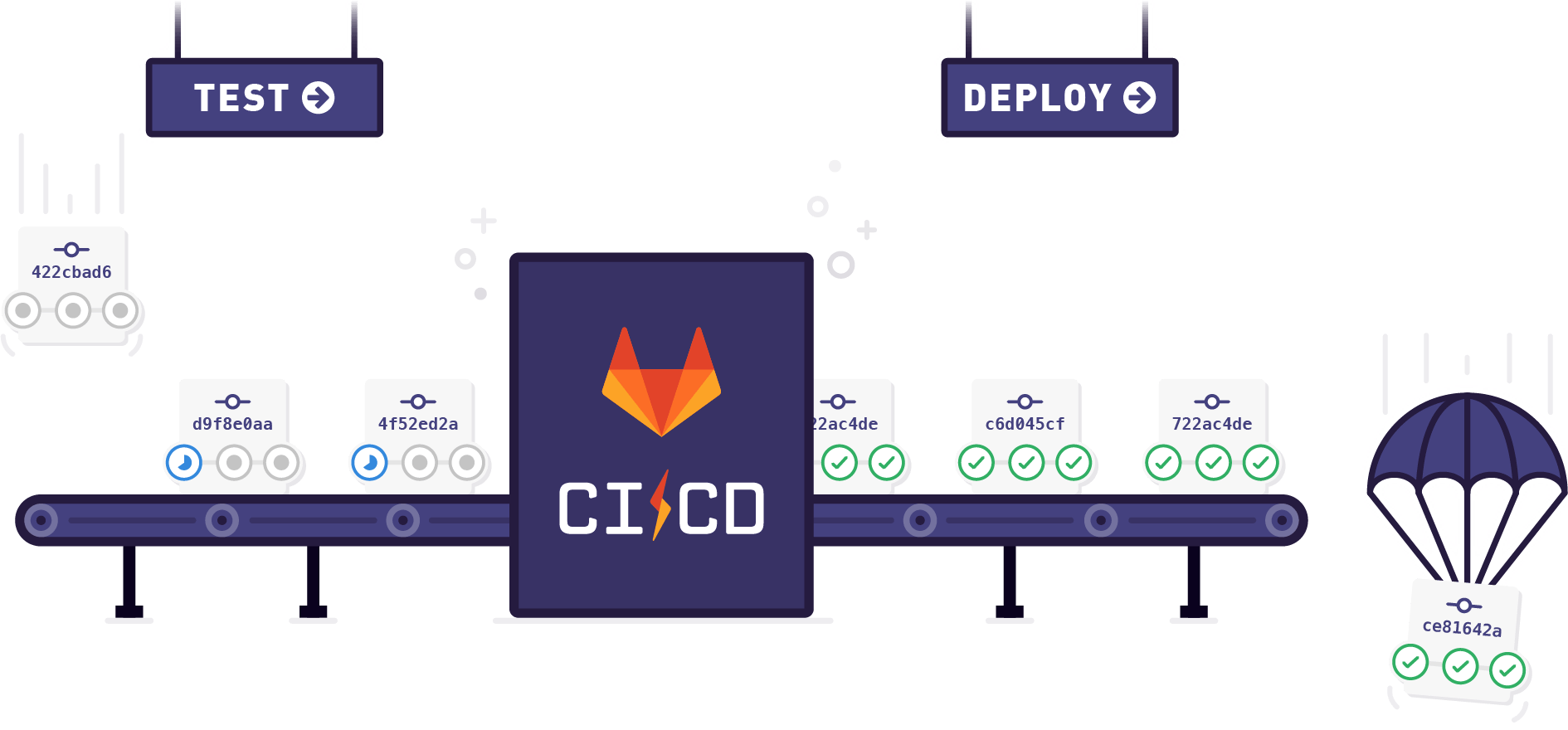

GitLab has shipped built-in CI/CD since version 8.0. If you are familiar with the concept of CI, GitLab CI can be loosely described as GitLab's built-in counterpart to what Travis CI is for GitHub. If you are not, GitLab CI automates building, testing, deploying, and releasing projects.

Architecture and Terms

GitLab CI is deeply integrated with GitLab. Each project has its own CI configuration, defined in .gitlab-ci.yml in the repository root. Once CI is properly configured, pipelines are triggered to run the automation. Each pipeline is tied to one Git commit, but not every commit, merge request, or other action triggers a pipeline. Whether one is triggered depends on the rules set in the configuration file.
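As a minimal sketch of such a trigger rule, the job below is only added to pipelines for commits on the master branch. The job name and the echo command are hypothetical placeholders, not part of any real project.

```yaml
# Hypothetical job: runs only for commits on the master branch
publish_docs:
  script:
    - echo "Publishing documentation"
  only:
    - master
```

Commits on other branches would not run this job, and if no job in the configuration matches a commit, no pipeline is created for it.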

Every pipeline needs a runner to run on. A project can be configured with one or more runners, and one runner can serve one or more pipelines. When a pipeline finishes, its results are reported and can be integrated with merge requests and other features.

Configure Runners

Runners can be configured by instance administrators, group owners, and project owners. Depending on where it is registered, a runner is either a shared runner, a group runner, or a specific runner.

If shared runners are available for your project, the easiest way is to enable them and go straight to configuring CI. If you need more runners (for performance or a special environment), you can deploy them on a host system or in Docker by following the official documentation.

Runners can be labelled with multiple tags. Jobs can then select runners by tag to get the runtime environment they need.
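A job selects runners through the tags keyword. The sketch below assumes a runner has been registered with both the docker and linux tags; the tag names and the job name are illustrative only.

```yaml
# Hypothetical job: picked up only by a runner that has BOTH tags
build_on_linux:
  tags:
    - docker
    - linux
  script:
    - uname -a
```

If no available runner carries all the listed tags, the job stays pending until one does.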

Create CI Files

Create CI Jobs

GitLab CI automatically creates pipelines for commits, merge requests, and so on. Each pipeline consists of several stages, and each stage consists of several jobs. We will start with the smallest unit, a job.

A job defines a list of script commands to run. These commands can build, deploy, package, or perform any other task. A simple job is defined in YAML.

    job_name:
      script:
        - uname -a
        - bundle exec rspec

This executes the two commands in order. Every job must have a name, and job names must be unique within the file.

Jobs can also run in Docker images with services attached. You can specify the Docker image and its related services at the top level of the CI configuration file.

    image: ruby:2.2

    services:
      - postgres:9.3

    before_script:
      - bundle install

    test:
      script:
        - bundle exec rake spec

By specifying a different image key in each job, jobs can run in different environments.
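A per-job image could look like the following sketch. The job names and the choice of ruby:2.3 as a second image are assumptions made for illustration.

```yaml
# Each job sets its own image, overriding any top-level image
test_ruby_2_2:
  image: ruby:2.2
  script:
    - bundle exec rake spec

test_ruby_2_3:
  image: ruby:2.3   # assumed second version, for illustration
  script:
    - bundle exec rake spec
```

This pattern is a simple way to run the same test suite against several runtime versions.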

Understand Stages and Parallelization

In some pipelines, jobs can be divided into groups and executed in parallel, which speeds up the automation. These groups are called stages. They are highly customizable and are defined with the stages keyword. There are three predefined stages.

    # ....

    stages:
      - build
      - test
      - deploy

    # ....

The number and names of stages can be changed at any time. Stages are executed one at a time, in order. Within a stage, GitLab runs the jobs in parallel, where runners are available, to save time. Once all jobs in the current stage have succeeded, the pipeline moves on to the next stage.
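To illustrate the ordering, here is a small sketch in which two jobs share the test stage. The job names and make targets are hypothetical.

```yaml
stages:
  - build
  - test

compile:
  stage: build
  script:
    - make build        # hypothetical target

# Both jobs below belong to the test stage, so they can run
# in parallel once the build stage has succeeded.
unit_tests:
  stage: test
  script:
    - make test-unit    # hypothetical target

lint:
  stage: test
  script:
    - make lint         # hypothetical target
```

With only one runner handling one job at a time, unit_tests and lint would still run one after another, but neither would start before compile has succeeded.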

It is also possible to override the default behavior of how stages run. To stop a job from being triggered automatically when the previous stage succeeds, add when: manual to it. This is useful, for example, when building and testing have passed but you want a final confirmation before pushing to the production environment.

    stages:
      - build
      - test
      - deploy

    # ....

    deploy_to_prod:
      stage: deploy
      when: manual

    # ....

You can pass artifacts between jobs. Each job automatically retrieves the artifacts of its dependencies.

    # ....

    build:linux:
      stage: build
      script: make build:linux
      artifacts:
        paths:
          - binaries/

    # ....

    test:linux:
      stage: test
      script: make test:linux
      dependencies:
        - build:linux

In this configuration, because the test:linux job depends on build:linux, the binaries/ artifact of build:linux is passed to test:linux under the same path.
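As a related sketch, and to my understanding of the GitLab CI reference, a job that lists no dependencies at all skips the artifact download entirely. The lint job and its make target are hypothetical.

```yaml
stages:
  - build
  - test

build:linux:
  stage: build
  script: make build:linux
  artifacts:
    paths:
      - binaries/

# Hypothetical job that does not need the binaries:
# an empty list means no artifacts are downloaded.
lint:
  stage: test
  script: make lint
  dependencies: []
```

This can save time when the artifacts are large and a job never reads them.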

Add Some Preparation Steps

Whether a job builds, tests, or deploys, it needs a proper environment. Building a container image to run the jobs is one option, yet sometimes the environment has to be set up dynamically. Rather than mixing preparation steps into the actual job script, GitLab CI provides before_script and after_script for preparation and cleanup.

    # ....

    job:
      before_script:
        - echo "execute this instead of global before script"
      script:
        - echo "my command"
      after_script:
        - echo "execute this after my script"

    # ....

You can also set default scripts that apply to all jobs.

    # ....

    default:
      before_script:
        - echo "global before script"
      after_script:
        - echo "global after script"

    # ....

Note that these default scripts are overridden by scripts defined in a job.
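The override behavior can be sketched as follows. Both job names are hypothetical, and the echo commands stand in for real preparation steps.

```yaml
default:
  before_script:
    - echo "global before script"

# No before_script of its own, so the default one runs first
job_with_default:
  script:
    - echo "runs after the global before script"

# Defines its own before_script, which replaces the default one
job_with_override:
  before_script:
    - echo "job-level before script"
  script:
    - echo "the global before script did not run for this job"
```

The job-level script replaces the default rather than being appended to it, so any shared setup the job still needs has to be repeated there.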

Pipelines for Merge Requests

GitLab 11.6 introduced pipelines for merge requests. This lets us run selected jobs specifically for merge requests.

As of GitLab 11.10, pipelines for merge requests require GitLab Runner 11.9 or higher due to the recent refspecs changes.

Configuring a job to run only for merge requests is simple.

    syntax_test:
      script:
        - ...
      only:
        - merge_requests

But if the merge requests come from a forked project, there is something else to be aware of.

Currently, those pipelines are created in a forked project, not in the parent project. This means you cannot completely trust the pipeline result, because, technically, external contributors can disguise their pipeline results by tweaking their GitLab Runner in the forked project.

Putting it together, a good practice is to run selected jobs such as linting on merge requests, and to run the full checks on the master branch before deploying to the production environment. Automation helps a lot, but manual review can also prevent problems.
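A minimal sketch of that split might look like this. The job names and make targets are hypothetical placeholders for your own lint and test commands.

```yaml
# Lightweight check that runs for merge requests only
lint:
  script:
    - make lint   # hypothetical target
  only:
    - merge_requests

# Full check that runs for commits on master, before any deployment
full_test:
  script:
    - make test   # hypothetical target
  only:
    - master
```

Because results from forked projects cannot be fully trusted, the full_test job on master is the one to rely on before deploying.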

Happy CI/CD, happy DevOps!