Abstract

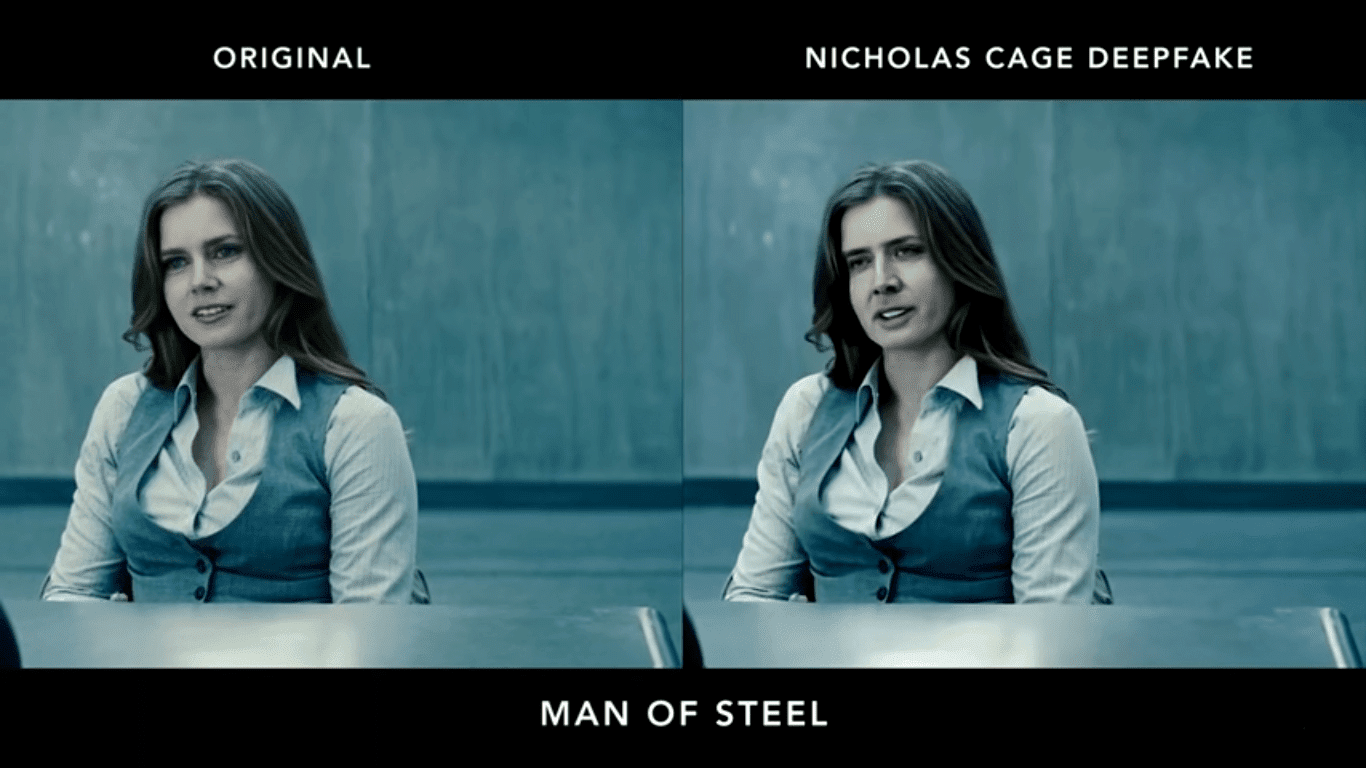

In 2017, a Reddit user named "deepfakes" posted several porn videos on the Internet that were not real but had been created with artificial intelligence. Deepfake is a portmanteau of "deep learning" and "fake", and a deep learning technique known as the generative adversarial network (GAN) is used to create fake media such as images and videos. As manipulation techniques have developed, research on detectors that spot forged images and videos has also grown. In this post, I will introduce the development of fake media detection techniques, which already existed in 2018 and have flourished since.

1. Fake Media Creation

Before 2019 there were at least two kinds of techniques for replacing the face of a targeted person: Deepfake and Face2Face. Deepfake was used for the aforementioned fake porn videos but has not been formally published, while Face2Face has been published and has several more advanced versions.

1.1.Deepfake

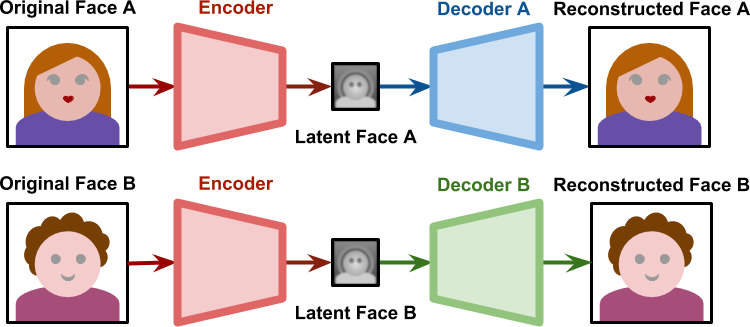

The core concept is the parallel training of two auto-encoders. Research has shown that raw images have excessive dimensionality, so an auto-encoder is used to reduce the dimensionality and learn a compact representation of each image, shown in the figure as the latent face.

The latent face is then passed to two different decoders, each of which reconstructs a face from it.

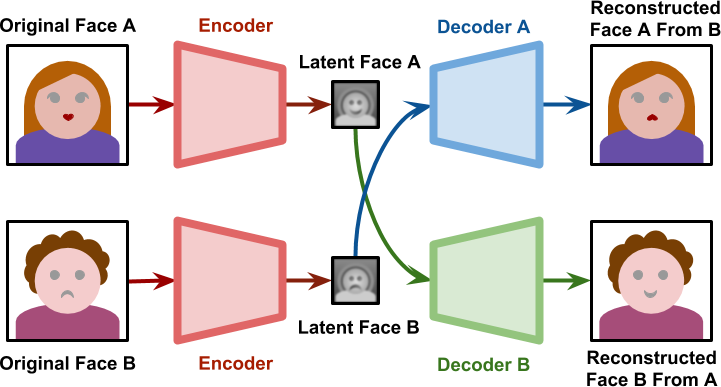

Once the encoder and decoders have been optimized, latent face A can be decoded by decoder B, which renders face A in B's style.

The two auto-encoders share the same encoder weights, while the decoders are separate. We can regard the encoder as stripping away all the style of a face, such as hair color and eye size, so that the latent face retains only its structure. Each decoder then paints a new style onto the latent face to endow it with a different identity.
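To make the data flow concrete, here is a minimal sketch of the shared-encoder, two-decoder layout. It is not the original Deepfake code: the class name `FaceSwapAutoencoder`, the single linear layers, the tiny dimensions, and the random untrained weights are all assumptions chosen only to show which parts are shared and how the swap is wired.

```python
import random


def make_matrix(rows, cols, rng):
    """Create a small random weight matrix (untrained, for illustration only)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class FaceSwapAutoencoder:
    """Hypothetical linear model: one shared encoder, one decoder per identity."""

    def __init__(self, image_dim, latent_dim, seed=0):
        rng = random.Random(seed)
        # The encoder is shared by both identities.
        self.encoder = make_matrix(latent_dim, image_dim, rng)
        # Each identity has its own decoder.
        self.decoders = {
            "A": make_matrix(image_dim, latent_dim, rng),
            "B": make_matrix(image_dim, latent_dim, rng),
        }

    def encode(self, image):
        """Map a flattened face image to its latent face."""
        return matvec(self.encoder, image)

    def decode(self, latent, identity):
        """Reconstruct a face from a latent face with the chosen decoder."""
        return matvec(self.decoders[identity], latent)

    def reconstruct(self, image, identity):
        """Training path: a face of A goes through decoder A, B through decoder B."""
        return self.decode(self.encode(image), identity)

    def swap(self, image_of_a):
        """Swap path: encode a face of A, then decode it with decoder B."""
        return self.decode(self.encode(image_of_a), "B")


model = FaceSwapAutoencoder(image_dim=16, latent_dim=4)
face_a = [0.5] * 16          # stand-in for a flattened 4x4 face crop
latent = model.encode(face_a)
swapped = model.swap(face_a)
```

In a real system the linear maps would be convolutional networks, and `reconstruct` would be trained on faces of A and B before `swap` is used.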

1.2.Face2Face

In [1], the authors present a new approach for reenacting a monocular target video sequence. Monocular here means that the video is captured with a single ordinary RGB camera, with no depth information. The algorithm has two advantages: its results are realistic, and it runs in real time.

1.3.Fake Videos

So far I have introduced techniques for manipulating images. Manipulating a video adds only one step: the video is split into frames, and the face swapping operation is repeated on every frame.
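The per-frame procedure can be sketched as a plain loop. This is only an illustration: `swap_video` and `paste_patch` are hypothetical names, frames are small grayscale grids stored as nested lists, and pasting a fixed patch stands in for a real image-level face swap.

```python
def swap_video(frames, swap_face):
    """Run an image-level face swap on every frame, one frame at a time."""
    swapped = []
    for frame in frames:
        # Each call sees only the current frame; nothing is carried over
        # from the frames that were processed before it.
        swapped.append(swap_face(frame))
    return swapped


def paste_patch(frame, patch, top, left):
    """Hypothetical stand-in for a face swap: overwrite a rectangle with `patch`."""
    result = [row[:] for row in frame]  # copy so the input frame is untouched
    for dy, patch_row in enumerate(patch):
        for dx, value in enumerate(patch_row):
            result[top + dy][left + dx] = value
    return result


# Three 4x4 frames of zeros stand in for a decoded video.
video = [[[0] * 4 for _ in range(4)] for _ in range(3)]
fake_face = [[9, 9], [9, 9]]
fake_video = swap_video(video, lambda frame: paste_patch(frame, fake_face, 1, 1))
```

Because `swap_face` receives a single frame, the loop has no way to keep consecutive outputs consistent with each other.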

Manipulating videos at the frame level has several flaws [2]:

- The face detector extracts only the face region, which leads to skin color that is inconsistent with the rest of the image.

- Frame-by-frame face swapping is not aware of the previously generated face and creates temporal anomalies. For example, inconsistent illumination between frames leads to flickering.
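As a rough illustration of the flickering problem, the sketch below measures how much the mean brightness jumps between consecutive frames. This is a deliberately crude measure of my own choosing, not the detector from [2]. The function names and the toy grayscale frames are assumptions.

```python
def mean_brightness(frame):
    """Average pixel value of a grayscale frame stored as nested lists."""
    pixels = [pixel for row in frame for pixel in row]
    return sum(pixels) / len(pixels)


def flicker_scores(frames):
    """Absolute change in mean brightness between each pair of consecutive frames."""
    levels = [mean_brightness(frame) for frame in frames]
    return [abs(after - before) for before, after in zip(levels, levels[1:])]


def uniform_frame(value):
    """Build a 2x2 frame in which every pixel has the same value."""
    return [[value, value], [value, value]]


# A steadily lit clip versus one whose illumination changes from frame to frame.
steady = [uniform_frame(100) for _ in range(4)]
flickering = [uniform_frame(v) for v in (100, 140, 95, 130)]

steady_scores = flicker_scores(steady)          # [0.0, 0.0, 0.0]
flickering_scores = flicker_scores(flickering)  # [40.0, 45.0, 35.0]
```

Large, irregular jumps like those in `flickering_scores` are the kind of temporal anomaly that a purely frame-level swap can introduce.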

1.4.Fake Benchmark

Two fake benchmarks are widely used today: FaceForensics and VidTIMIT.

FaceForensics is generated by Face2Face. It consists of more than 500,000 frames containing faces from 1004 videos. This dataset contains two versions: source-to-target and self-reenactment.

1.5.Some Other Face Swapping Algorithms

Face replacement can be divided into two kinds according to the target identity the algorithm uses: face swapping and face generating. Face swapping uses a large face library as the source of target faces, while face generating uses a style matrix to transfer the source face to the target one.

1.5.1.Face Swapping: Automatically Replacing Faces in Photographs[5]

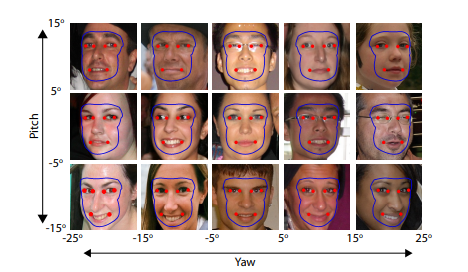

[5] is a face swapping method. Bitouk et al. divide the faces in their library into 15 bins according to the pose of the face, given by its yaw and pitch. In case you are not familiar with these angles, yaw is the rotation of the head to the left or right and pitch is the rotation of the head up or down; both are estimated by software. They then add the mirror image of every face, which doubles the face library.

To get a more precise result, they define a generic face orientation for each bin. For example, the generic face orientation of the bin covering -5 to 5 in yaw and -15 to -5 in pitch is 0 in yaw and -10 in pitch. They then transform each image into the generic orientation of its bin with an affine transformation. With a similar operation we can figure out which bin an input image belongs to.
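The binning step can be sketched as follows. The bin edges are an assumption: 10-degree bins spanning -25 to 25 in yaw and -15 to 15 in pitch give 5 x 3 = 15 bins and agree with the example above, but the post does not list the edges. The function names are hypothetical, and taking the bin center as the generic orientation is inferred from the single example given.

```python
# Assumed bin edges in degrees: 5 yaw bins x 3 pitch bins = 15 pose bins.
YAW_EDGES = [-25, -15, -5, 5, 15, 25]
PITCH_EDGES = [-15, -5, 5, 15]


def find_interval(angle, edges):
    """Return the (low, high) interval containing `angle`, or None if out of range."""
    for low, high in zip(edges, edges[1:]):
        if low <= angle < high:
            return low, high
    return None


def pose_bin(yaw, pitch):
    """Assign a face to a pose bin from its estimated yaw and pitch."""
    yaw_interval = find_interval(yaw, YAW_EDGES)
    pitch_interval = find_interval(pitch, PITCH_EDGES)
    if yaw_interval is None or pitch_interval is None:
        return None  # pose lies outside the assumed range
    return yaw_interval, pitch_interval


def generic_orientation(bin_):
    """Assumed generic orientation of a bin: the center of its yaw and pitch ranges."""
    (yaw_low, yaw_high), (pitch_low, pitch_high) = bin_
    return (yaw_low + yaw_high) / 2, (pitch_low + pitch_high) / 2


def mirrored_pose(yaw, pitch):
    """A horizontal mirror image flips the sign of yaw and keeps pitch."""
    return -yaw, pitch


example_bin = pose_bin(2, -8)                   # ((-5, 5), (-15, -5))
example_generic = generic_orientation(example_bin)  # (0.0, -10.0)
bin_count = (len(YAW_EDGES) - 1) * (len(PITCH_EDGES) - 1)
```

The affine transformation that warps each face to its generic orientation is left out here, since its parameters depend on facial landmarks that this sketch does not model.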

2. Fake Media Detection

Fake video generation is built on fake image generation, so examining each frame of a video can be an effective and efficient way to distinguish a fake video from a real one. Videos additionally exhibit temporal anomalies that need to be detected. Because image and video detection are so similar, we can analyze their forgery detection techniques together.

2.1.Traditional Media Detection

Media forgery detection techniques have been developed since 1990, and traditional detectors are based on two factors [3]:

- An externally added signal (e.g., a watermark)

- Intrinsic statistical information (e.g., local noise, double JPEG localization, CFA pattern, illumination)

The differences between traditional tampering and Deepfake are:

| Aspect | Traditional | Deepfake |
|---|---|---|
| Method | always has a source image | no source image; generated from a random vector |
| Object | not limited to faces | face-based |
| Complexity | time-consuming and requires professional skill | easy to make, and improves along with the technology |
| Detection | hard for humans to recognize but easy for computers | sometimes recognizable by humans but hard for computers |

Because of these differences, a Deepfake has no source image, so it carries neither an externally added signal nor the intrinsic statistical information that traditional detectors rely on. Advanced face swapping algorithms that alter only the face region can fool both humans and computers, so tampering detection calls for more robust forensic techniques.

2.2.CNN-based Detection

In this section, I will introduce the CNN-based detection method with a table.

References

[1] Face2Face: Real-time Face Capture and Reenactment of RGB Videos.

[2] Deepfake Video Detection Using Recurrent Neural Networks.

[3] Deep Fake Image Detection based on Pairwise Learning.

[4] Two-Stream Neural Networks for Tampered Face Detection.

[5] Face Swapping: Automatically Replacing Faces in Photographs.